Risk-Sensitive Autonomy

As autonomous systems are increasingly deployed in real-world settings, the risk stemming from unknown and unforeseen circumstances rises with them. In safety-critical scenarios in particular, such as aerospace applications, decision making should account for risk. For example, spacecraft control currently relies heavily on a relatively large and highly skilled mission operations team that generates detailed time-ordered and event-driven sequences of commands. This approach will not remain viable as the number of missions increases and as pressure grows to limit operations team and Deep Space Network (DSN) costs. Future spaceflight missions will operate at large distances and light-time delays from Earth, requiring novel capabilities for astronaut crews and ground operators to manage spacecraft consumables such as power, water, propellant, and life support systems to prevent mission failure. To maximize the science return under these conditions, the ability to deal with emergencies and safely explore remote regions is becoming increasingly important.

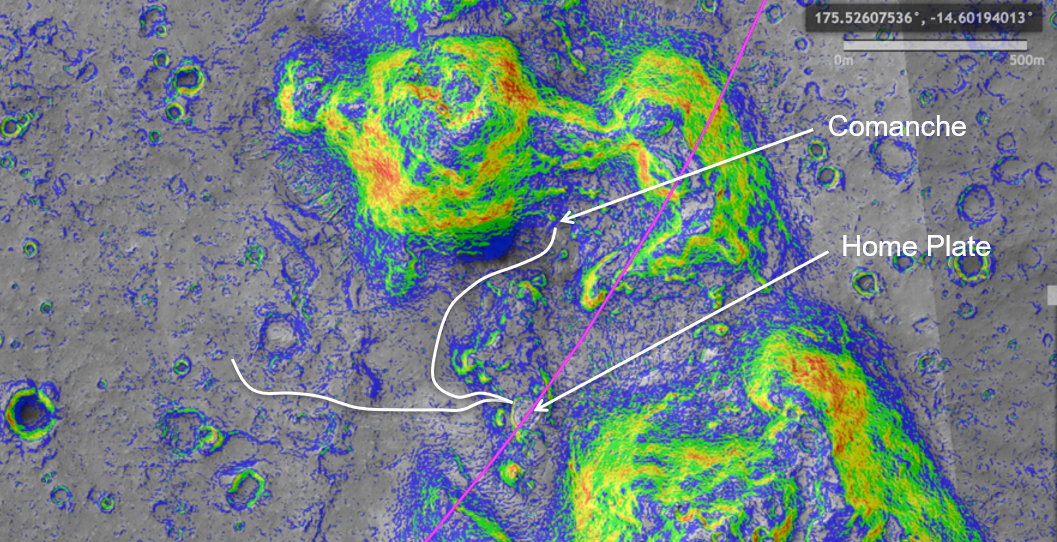

For example, in Mars rover navigation problems, finding planning policies that minimize risk is of utmost importance due to the uncertainties present in Mars surface data.

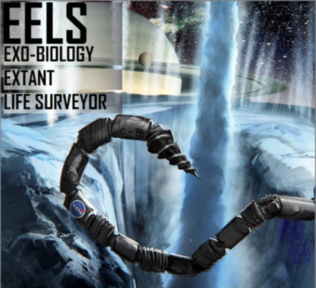

Another application area arises in the context of NASA’s recent Exo-biology Extant Life Surveyor (EELS) project, where a multimodal, snake-like autonomous robot is tasked with exploring the vents of Enceladus (one of the satellites of Saturn).

Enceladus is known to have a subterranean ocean and active geysers. The robot is capable of traversing the surface, descending into the vents, and swimming in the ocean. However, significant uncertainties arise because little, if any, information is available about Enceladus’ surface, vents, or ocean. In such scenarios, risk-sensitive decision making is fundamental to ensuring mission success.

In both applications above, sensing constraints do not allow for full-state observation, so decisions must be made from partial observations. These problems can be represented as a partially observable Markov decision process (POMDP), where decision making is subject to uncertainty stemming from stochastic outcomes as well as partial observation.
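To make the partial-observation setting concrete, the following is a minimal sketch of the standard Bayes belief update for a discrete POMDP. The table layout (`T[a][s][s2]`, `O[a][s2][o]`) and the function name are assumptions chosen for illustration and are not taken from the original work.

```python
def belief_update(belief, action, observation, T, O):
    """One Bayes-filter step for a discrete POMDP (illustrative sketch).

    belief: list of probabilities over states.
    T[a][s][s2]: assumed layout, probability of moving from s to s2 under action a.
    O[a][s2][o]: assumed layout, probability of observing o after reaching s2 via a.
    """
    n = len(belief)
    unnormalized = []
    for s2 in range(n):
        # Prediction: push the current belief through the transition model.
        predicted = sum(T[action][s][s2] * belief[s] for s in range(n))
        # Correction: weight by the likelihood of the received observation.
        unnormalized.append(O[action][s2][observation] * predicted)
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return [p / total for p in unnormalized]
```

The agent never sees the state itself; it only maintains this distribution, which is why a policy for a POMDP must map observation histories (or beliefs) to actions.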

To address these issues, we proposed a method based on bounded policy iteration to design sub-optimal risk-averse policies for POMDPs. Inspired by the operations research and mathematical finance communities, we considered coherent risk measures, e.g., conditional value-at-risk (CVaR). More precisely, for a given POMDP, a discount factor, and a total dynamic risk functional composed of a set of coherent risk measures, we are interested in computing a policy that minimizes the total discounted risk, starting from a given initial probability distribution over the POMDP states.

Unfortunately, synthesizing risk-averse optimal policies for POMDPs requires infinite memory and is thus undecidable. To overcome this difficulty, I proposed a method based on bounded policy iteration for designing stochastic, finite-state (finite-memory) controllers, which takes advantage of standard convex optimization methods. Given a memory budget and an optimality criterion, the proposed method iteratively modifies the stochastic finite-state controller, leading to sub-optimal solutions with lower coherent risk.
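As a rough illustration of what a stochastic finite-state controller looks like as a data structure, the sketch below stores one action distribution per memory node and one node-transition distribution per (node, observation) pair. The class name, the split into two tables, and the method names are assumptions for illustration; the sketch shows only how such a controller is executed, not the bounded policy iteration step that optimizes its probabilities.

```python
import random


class StochasticFSC:
    """Stochastic finite-state controller with a fixed memory budget (sketch)."""

    def __init__(self, action_probs, node_probs, start_node=0, seed=None):
        # action_probs[g][a]: probability of choosing action a in memory node g.
        # node_probs[g][o][g2]: probability of moving to node g2 after
        # receiving observation o while in node g.
        self.action_probs = action_probs
        self.node_probs = node_probs
        self.node = start_node
        self.rng = random.Random(seed)

    def act(self):
        """Sample an action from the current node's action distribution."""
        weights = self.action_probs[self.node]
        return self.rng.choices(range(len(weights)), weights=weights)[0]

    def observe(self, observation):
        """Sample the next memory node given the received observation."""
        weights = self.node_probs[self.node][observation]
        self.node = self.rng.choices(range(len(weights)), weights=weights)[0]
        return self.node
```

The number of memory nodes is the memory budget: the controller summarizes the entire observation history in its current node, which keeps the policy finite at the price of sub-optimality.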

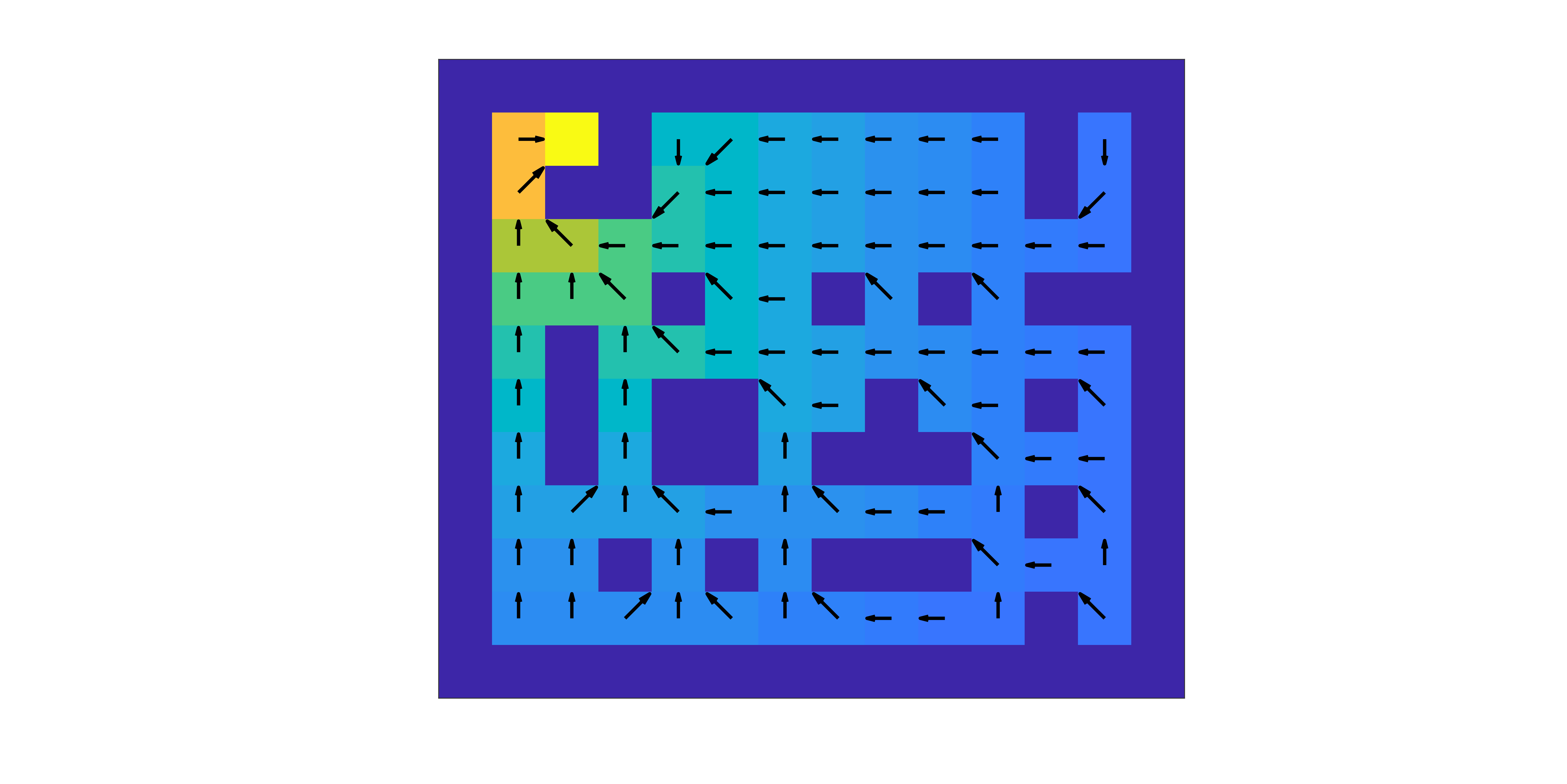

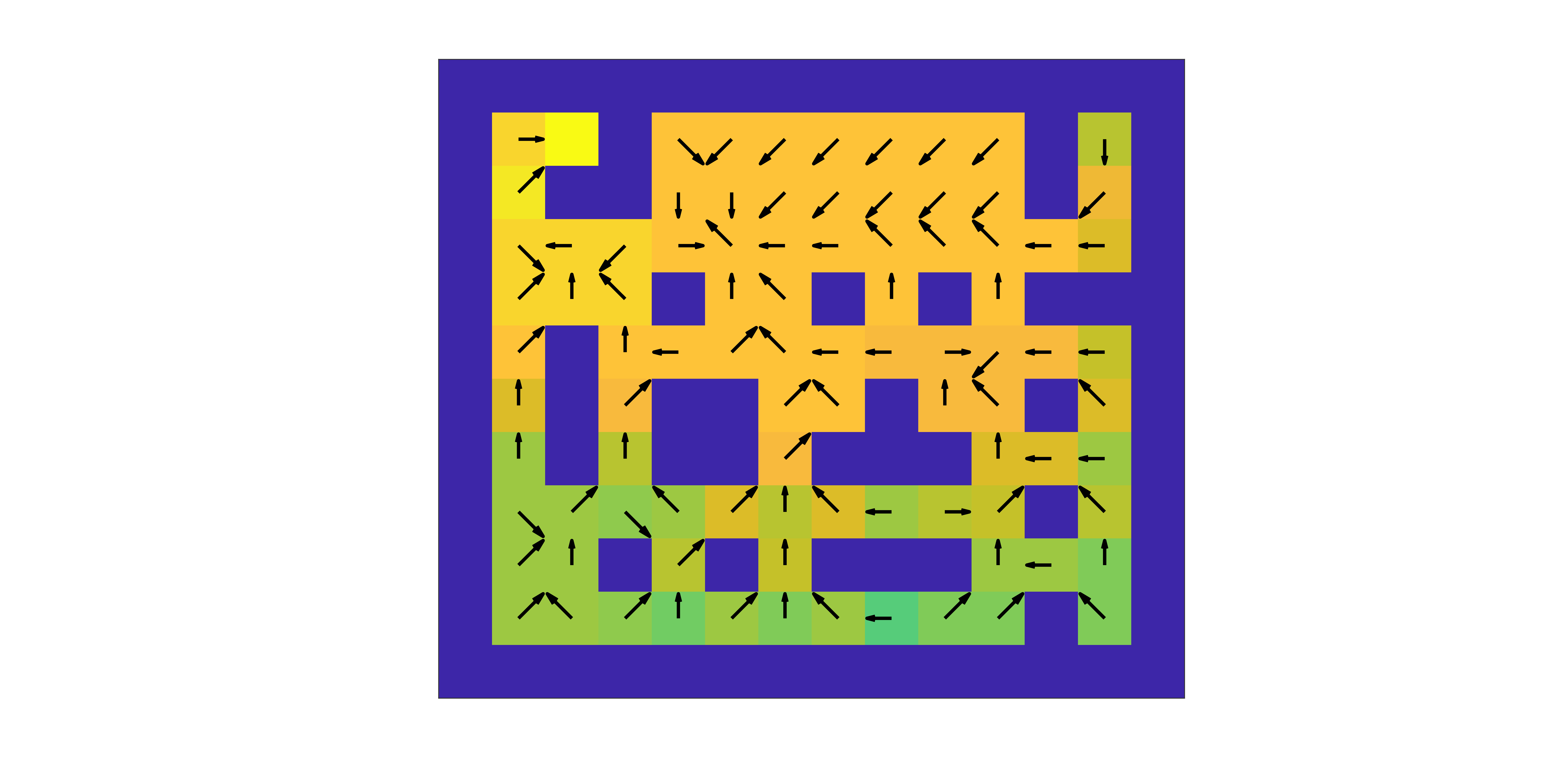

To illustrate, consider an agent (e.g., the Mars rover) that has to autonomously navigate a two-dimensional terrain map (e.g., the Mars surface) represented by a grid world with obstacles of different shapes. At each time step, the agent can move to any of its eight neighboring states (diagonal moves are allowed). Due to sensing and control noise, however, there is a fixed probability that the agent instead moves to a random neighboring state. Each move made before reaching the destination incurs a stage-wise cost to account for fuel usage. Between the starting point and the destination, there are a number of obstacles that the agent should avoid. Hitting an obstacle incurs a cost and leads to termination, while the goal grid region carries a reward. Future costs are weighted by a discount factor. After a move is chosen, the observation of the agent is assumed to be binary, i.e., either an obstacle is detected in the next cell that the robot is moving to or it is not. To represent uncertainty in the Mars terrain map, I also perturbed the obstacle and target positions: with a fixed probability, they are shifted in a random direction to one of the neighboring grid cells.
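The noisy eight-neighbor motion model and the binary obstacle observation described above can be sketched as follows. The grid size, slip probability, and obstacle set passed in are hypothetical parameters, and clipping moves at the grid boundary is an assumption, since the text does not specify the boundary behavior.

```python
import random

# The eight neighboring moves (row offset, column offset), diagonals included.
MOVES = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def step(pos, move_index, obstacles, size, slip_prob, rng):
    """Return (next_pos, obstacle_detected) for one noisy move (sketch).

    pos: (row, col) of the agent; obstacles: set of (row, col) cells.
    size, slip_prob: hypothetical grid side length and noise probability.
    """
    if rng.random() < slip_prob:
        # Sensing/control noise: a random neighboring move replaces the chosen one.
        move_index = rng.randrange(len(MOVES))
    d_row, d_col = MOVES[move_index]
    # Assumption: moves that would leave the grid are clipped to the border.
    row = min(max(pos[0] + d_row, 0), size - 1)
    col = min(max(pos[1] + d_col, 0), size - 1)
    next_pos = (row, col)
    # Binary observation: is there an obstacle in the cell being moved into?
    return next_pos, next_pos in obstacles
```

For example, `step((2, 2), 4, {(2, 3)}, 5, 0.0, random.Random(0))` moves one cell to the right with no noise and reports that an obstacle was detected.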

The objective is to compute a safe (i.e., obstacle-free) path that is fuel efficient. To this end, we consider CVaR at a given confidence level as the coherent risk measure.
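For reference, one common way to write CVaR for a random cost is the Rockafellar-Uryasev form below. The symbols $c$ (cost), $z$ (auxiliary threshold), and $\beta$ (confidence level) are assumptions for illustration, and the original work may use a different parameterization.

$$\mathrm{CVaR}_\beta(c) = \inf_{z \in \mathbb{R}} \left\{ z + \frac{1}{1-\beta}\,\mathbb{E}\big[(c - z)_+\big] \right\}$$

A minimal sketch of the corresponding sample-based estimate, under the same assumed convention, is:

```python
def cvar(costs, beta):
    """Empirical CVaR of a list of sampled costs at confidence level beta.

    Uses the Rockafellar-Uryasev form; for samples, the objective is piecewise
    linear and convex in z, so the minimum is attained at one of the samples.
    """
    if not costs:
        raise ValueError("costs must be non-empty")
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    n = len(costs)

    def objective(z):
        excess = sum(max(c - z, 0.0) for c in costs)
        return z + excess / ((1.0 - beta) * n)

    return min(objective(z) for z in costs)


# Hypothetical cost samples: a short route that occasionally hits an obstacle
# versus a longer route that never does.
short_route = [10.0] * 9 + [100.0]
long_route = [20.0] * 10
```

With these hypothetical samples, the short route has the lower mean cost (19 versus 20), while `cvar(short_route, 0.9)` is about 100 and `cvar(long_route, 0.9)` is about 20, up to floating-point rounding. A risk-neutral criterion therefore prefers the short route and a CVaR criterion prefers the long one. Setting `beta` to 0 recovers the plain expectation.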

The numerical experiments show that the risk-neutral policy leads to shorter paths from different cells to the target. However, on perturbed scenarios it performed poorly, with a number of runs ending in failure. The risk-averse policy, on the other hand, chooses longer routes from cells to the target, but it resulted in fewer failed scenarios. Thus, using a risk-sensitive policy increases robustness to terrain map uncertainty, which can be used for Mars rover planning missions.